Computer Vision Development for Estimating Trunk Angles

Posted on by

Work-related musculoskeletal disorders have been linked to many physical job risk factors, such as forceful movements, repetitive exertions, awkward postures and vibration. These risk factors are typically evaluated using ergonomic risk assessment methods or tools, which are predominantly self-report or observational. Self-report methods include questionnaires, checklists and interviews. Observational methods involve assessing pre-defined risk factors with ergonomics checklists, either during a site visit or from a video recording of the job. Observational methods are widely used by professional ergonomists and safety professionals in the field. However, their accuracy is limited by the subjective nature of the evaluations, which can lead to biased risk interpretations. As a consequence, inaccurate observational risk assessments may lead to ineffective interventions for reducing the job risk factors for work-related musculoskeletal disorders.

In addition, current assessment methods cannot measure risk over long periods, yet representative sampling over longer periods of time is critical for determining accurate total risk exposure. Computer vision, an artificial intelligence (AI) technology, is a promising tool for conducting risk assessments. It uses a computer instead of a human observer to identify body posture, motion and hand activity, and it has become increasingly popular for classifying postures in ergonomic applications. Because it is low cost, easy to use and able to assess posture in real time, computer vision is likely to reduce the burden and cost associated with current ergonomic risk analysis tools.

Researchers have used computer vision to classify lifting postures using the dimensions of boxes drawn tightly around the subject, called bounding boxes [i]. Earlier studies have demonstrated lifting monitor algorithms [ii] and posture classification using features extracted by computer vision [iii]. A current study by NIOSH researchers and University of Wisconsin-Madison collaborators is exploring whether simple bounding box dimensions obtained with computer vision can predict the sagittal trunk angle (the angle of the line from the center of the hips to the center of the shoulders), a predictor of low-back disorders [iv], [v]. This new computer vision-based risk assessment method simplifies the measurement of trunk angles and allows ergonomic risk to be quantified over long periods of time in industrial settings.
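
The trunk angle definition in parentheses can be made concrete with a few lines of geometry. The sketch below is a minimal illustration, not the study's code: it assumes 2D sagittal-plane joint coordinates (x forward, y up), such as motion capture markers projected onto the sagittal plane, and measures the angle of the hip-center-to-shoulder-center line from vertical, so 0° is upright and 90° is a horizontal trunk. The function names `midpoint` and `sagittal_trunk_angle` are hypothetical.

```python
import math
from typing import Tuple

# Hypothetical convention: sagittal-plane coordinates with x pointing forward
# (the direction the subject faces) and y pointing up. Image pixel rows grow
# downward, so y would need to be negated before using pixel coordinates.
Point = Tuple[float, float]


def midpoint(a: Point, b: Point) -> Point:
    """Midpoint of two joint locations, e.g. the left and right hips."""
    return ((a[0] + b[0]) / 2.0, (a[1] + b[1]) / 2.0)


def sagittal_trunk_angle(l_hip: Point, r_hip: Point,
                         l_shoulder: Point, r_shoulder: Point) -> float:
    """Angle in degrees between vertical and the line from the hip center
    to the shoulder center: 0 = upright, 90 = trunk horizontal,
    negative = leaning backward (extension)."""
    hip = midpoint(l_hip, r_hip)
    shoulder = midpoint(l_shoulder, r_shoulder)
    dx = shoulder[0] - hip[0]  # forward displacement of the shoulders
    dy = shoulder[1] - hip[1]  # upward displacement of the shoulders
    return math.degrees(math.atan2(dx, dy))


if __name__ == "__main__":
    # Shoulders 0.5 m above and 0.5 m ahead of the hips: 45 degrees of flexion.
    print(sagittal_trunk_angle((0.0, 1.0), (0.0, 1.0), (0.5, 1.5), (0.5, 1.5)))
```

Using `atan2(dx, dy)` rather than `atan(dx / dy)` keeps the result well defined when the trunk is exactly horizontal (dy = 0).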

Trunk angles are not currently part of commonly used lifting assessment tools because they are difficult to measure [vi]. The new method does not require high-precision posture measurements, so it is more tolerant of the visual conditions encountered in industrial settings.

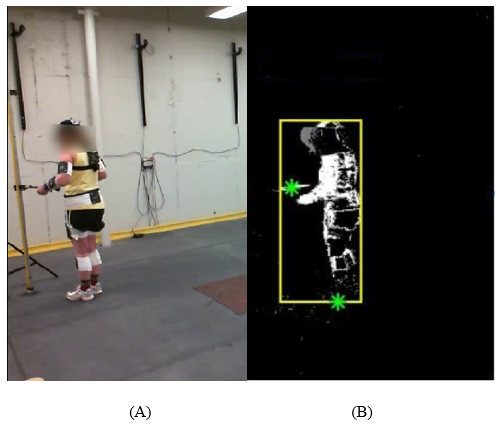

To develop the computer vision-based method, researchers modeled the trunk flexion angle using a training dataset of 105 computer-generated lifting postures with different horizontal and vertical hand locations. For each training case, they drew a bounding box (shown in the figure) tightly around the subject, measured its height and width, and recorded the torso angle. In the figure, image B shows how the computer vision lifting monitor algorithm processes the video in image A. A rectangular bounding box encloses the subject's body motion, including the locations of the hands and ankles, which are marked with green asterisks [vii].
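
The text does not state the form of the trunk angle model. As a hedged illustration only, the sketch below measures the height and width of the tightest axis-aligned box around a set of image points and fits an ordinary least-squares model of the form angle ≈ b0 + b1·height + b2·width. The linear model, the use of raw (unnormalized) box dimensions, and all function names (`box_height_width`, `fit_trunk_model`, `predict`) are assumptions; the published model may differ.

```python
from typing import Iterable, List, Sequence, Tuple

Point = Tuple[float, float]


def box_height_width(points: Iterable[Point]) -> Tuple[float, float]:
    """Height and width of the tightest axis-aligned box around the points
    (e.g. silhouette pixels plus detected hand and ankle locations)."""
    pts = list(points)
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]
    return max(ys) - min(ys), max(xs) - min(xs)


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the small dense system a @ x = b by Gaussian elimination with
    partial pivoting. Assumes a is non-singular (non-degenerate data)."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_trunk_model(dims: Sequence[Tuple[float, float]],
                    angles: Sequence[float]) -> List[float]:
    """Least-squares fit of angle ~ b0 + b1*height + b2*width using the
    normal equations (X^T X) b = X^T y. Returns [b0, b1, b2]."""
    rows = [[1.0, h, w] for h, w in dims]
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    xty = [sum(r[i] * y for r, y in zip(rows, angles)) for i in range(3)]
    return solve(xtx, xty)


def predict(coefs: Sequence[float], height: float, width: float) -> float:
    """Predicted trunk angle (degrees) for one bounding box."""
    b0, b1, b2 = coefs
    return b0 + b1 * height + b2 * width
```

In use, each training case would contribute one `(height, width)` pair from `box_height_width` and its recorded torso angle; the fitted coefficients are then applied with `predict` to boxes measured on new video frames.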

The model was validated using 180 lifting tasks performed by 5 subjects in a laboratory setting. Trunk flexion angles for these tasks were measured with a research-grade motion capture system, which served as the gold standard for the validation test. The mean absolute difference between predicted and motion capture measured trunk angles was 15.85°, and there was a linear relationship between predicted and measured trunk angles (R² = 0.80, p < 0.001). The error in measuring trunk angle was 2.52°.
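
The two validation statistics reported here can be computed from paired per-task angles. This sketch assumes one predicted and one motion capture angle per lifting task; R² is computed as the squared Pearson correlation, which equals the R² of a simple least-squares line between predicted and measured values. It does not compute the p-value, and the function names are hypothetical.

```python
from typing import Sequence


def _check_pairs(pred: Sequence[float], meas: Sequence[float]) -> None:
    """Require two equal-length sequences with at least two values."""
    if len(pred) != len(meas) or len(pred) < 2:
        raise ValueError("need two equal-length sequences of at least 2 values")


def mean_absolute_difference(pred: Sequence[float], meas: Sequence[float]) -> float:
    """Average of |predicted - measured| across tasks, in degrees."""
    _check_pairs(pred, meas)
    return sum(abs(p - m) for p, m in zip(pred, meas)) / len(pred)


def linear_r_squared(pred: Sequence[float], meas: Sequence[float]) -> float:
    """Squared Pearson correlation between predicted and measured angles."""
    _check_pairs(pred, meas)
    n = len(pred)
    mean_p = sum(pred) / n
    mean_m = sum(meas) / n
    sxy = sum((p - mean_p) * (m - mean_m) for p, m in zip(pred, meas))
    sxx = sum((p - mean_p) ** 2 for p in pred)
    syy = sum((m - mean_m) ** 2 for m in meas)
    return sxy * sxy / (sxx * syy)
```

The two measures answer different questions: R² describes how well measured angles track predicted ones along a line, while the mean absolute difference describes how far apart individual predictions are from the gold standard.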

This study demonstrated the feasibility of using computer vision algorithms to predict trunk flexion angles for lifting tasks in a video recording without a human observer's input. This computer vision-based lifting risk assessment method may serve as a non-intrusive, automatic and practical risk assessment tool in many workplace settings. Because the method can automatically collect risk data over extended periods, it may be an efficient way to assess lifting risks in large-scale field studies. Ergonomists may also use the continuous risk information to devise and prioritize interventions. We plan to refine the computer algorithms to estimate other lifting risk factors, such as the trunk asymmetry angle, and other lifting risk variables used by the revised NIOSH lifting equation.
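
For context on the lifting risk variables mentioned here, the metric form of the revised NIOSH lifting equation computes a recommended weight limit (RWL) as a 23 kg load constant multiplied by horizontal (H), vertical (V), vertical travel distance (D), asymmetry angle (A), frequency and coupling multipliers; the lifting index is the load divided by the RWL. The sketch below implements the closed-form multipliers only. The frequency (`fm`) and coupling (`cm`) multipliers come from lookup tables in NIOSH's documentation and are simply passed in. How vision-derived hand locations or a future trunk asymmetry estimate would supply H, V, D and A is an assumption, not something the study describes.

```python
LOAD_CONSTANT_KG = 23.0  # metric load constant


def horizontal_multiplier(h_cm: float) -> float:
    """HM = 25/H; H below 25 cm counts as 25 cm, above 63 cm gives 0."""
    if h_cm <= 25.0:
        return 1.0
    if h_cm > 63.0:
        return 0.0
    return 25.0 / h_cm


def vertical_multiplier(v_cm: float) -> float:
    """VM = 1 - 0.003|V - 75|; hand heights above 175 cm give 0."""
    if v_cm > 175.0:
        return 0.0
    return 1.0 - 0.003 * abs(v_cm - 75.0)


def distance_multiplier(d_cm: float) -> float:
    """DM = 0.82 + 4.5/D; D below 25 cm counts as 25 cm, above 175 cm gives 0."""
    if d_cm <= 25.0:
        return 1.0
    if d_cm > 175.0:
        return 0.0
    return 0.82 + 4.5 / d_cm


def asymmetric_multiplier(a_deg: float) -> float:
    """AM = 1 - 0.0032A; asymmetry angles above 135 degrees give 0."""
    if a_deg > 135.0:
        return 0.0
    return 1.0 - 0.0032 * a_deg


def recommended_weight_limit(h_cm: float, v_cm: float, d_cm: float,
                             a_deg: float, fm: float, cm: float) -> float:
    """RWL in kg; fm and cm are taken from NIOSH lookup tables."""
    return (LOAD_CONSTANT_KG * horizontal_multiplier(h_cm)
            * vertical_multiplier(v_cm) * distance_multiplier(d_cm)
            * asymmetric_multiplier(a_deg) * fm * cm)


def lifting_index(load_kg: float, rwl_kg: float) -> float:
    """LI = load / RWL; a zero RWL means no load is acceptable."""
    if rwl_kg == 0.0:
        return float("inf")
    return load_kg / rwl_kg
```

Under ideal conditions (H = 25 cm, V = 75 cm, D = 25 cm, A = 0°, fm = cm = 1) every multiplier is 1 and the RWL equals the 23 kg load constant; doubling H to 50 cm halves it.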

We would like to hear from you regarding the potential applications of this computer vision-based lifting risk assessment method in your workplace.

Menekse S. Barim, PhD, AEP, is a Research Industrial Engineer in the NIOSH Division of Field Studies and Engineering.

Robert G. Radwin, PhD, is the Duane H. and Dorothy M. Bluemke Professor in the College of Engineering at the University of Wisconsin-Madison.

Ming-Lun (Jack) Lu, PhD, CPE, is a Research Ergonomist in the NIOSH Division of Field Studies and Engineering and Manager of the NIOSH Musculoskeletal Health Cross-Sector Program.

References

[i] Greene, R. L., Lu, M.-L., Barim, M. S., Wang, X., Hayden, M., Hu, Y. H., & Radwin, R. G. (2019). Estimating Trunk Angles During Lifting Using Computer Vision Bounding Boxes. Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 63(1), 1128–1129.

[ii] Wang, X., Hu, Y. H., Lu, M.-L., & Radwin, R. G. (2019). The accuracy of a 2D video-based lifting monitor. Ergonomics, 62(8), 1043–1054.

[iii] Greene, R. L., Hu, Y. H., Difranco, N., Wang, X., Lu, M.-L., Bao, S., … & Radwin, R. G. (2019). Predicting Sagittal Plane Lifting Postures From Image Bounding Box Dimensions. Human Factors, 61(1), 64–77.

[iv] Marras, W. S., Lavender, S. A., Leurgans, S. E., Rajulu, S. L., Allread, W. G., Fathallah, F. A., & Ferguson, S. A. (1993). The role of dynamic three-dimensional trunk motion in occupationally-related low back disorders: The effects of workplace factors, trunk position, and trunk motion characteristics on risk of injury. Spine, 18(5), 617–628.

[v] Lavender, S. A., Andersson, G. B., Schipplein, O. D., & Fuentes, H. J. (2003). The effects of initial lifting height, load magnitude, and lifting speed on the peak dynamic L5/S1 moments. International Journal of Industrial Ergonomics, 31(1), 51–59.

[vi] Patrizi, A., Pennestrì, E., & Valentini, P. P. (2016). Comparison between low-cost marker-less and high-end marker-based motion capture systems for the computer-aided assessment of working ergonomics. Ergonomics, 59(1), 155–162.

[vii] Greene, R. L., Lu, M.-L., Barim, M. S., et al. (2020, September). Estimating Trunk Angle Kinematics During Lifting Using a Computationally Efficient Computer Vision Method. Human Factors. doi:10.1177/0018720820958840
